On Nov. 1, I pitched a column about effective altruism. I had no idea what was going to happen next.

One of the most prominent adherents of effective altruism (EA) is Sam Bankman-Fried. Last week, his net worth went from $16 billion to zero, and he stepped down as CEO of the cryptocurrency exchange FTX.

FTX and Bankman-Fried are under investigation by the Securities and Exchange Commission as well as authorities in the Bahamas, where his companies are based. It’s a disaster.

Bankman-Fried’s parents are law professors who studied utilitarianism, a branch of moral philosophy that holds the moral action is the one that leads to the greatest happiness for the greatest number of people. After college, Bankman-Fried pursued “earning to give,” in which you make as much money as possible in order to donate it. In April, Bankman-Fried pledged to give away 99% of his then-fortune.

EA is a movement built around thinking carefully about the best way to do good in the world. Most, but not all, effective altruists are utilitarians. The movement also entails some pretty radical ideas about what people should be donating to. For instance, one EA organization, GiveWell, doesn’t recommend any charities based in the United States.

The most controversial part of EA, though, doesn’t have anything to do with the charities GiveWell recommends, which do things like distributing vitamin A supplements and anti-malaria medicine.

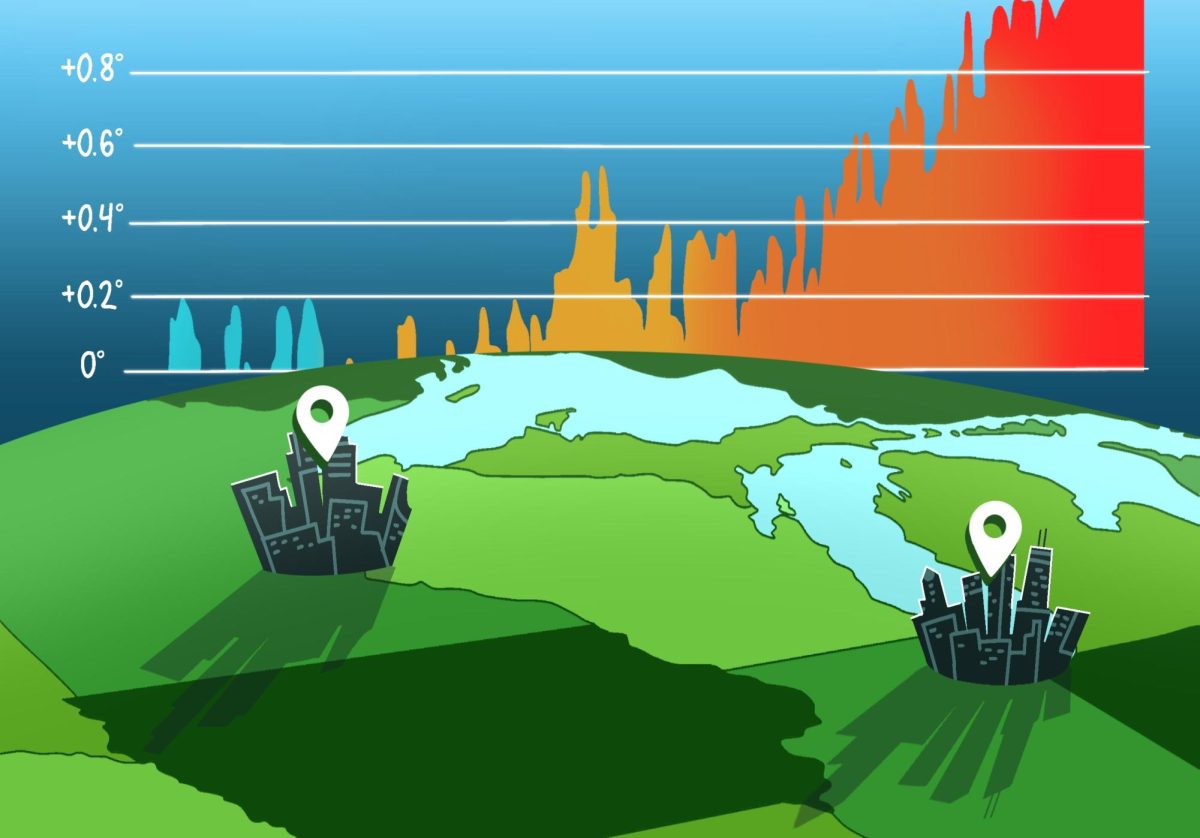

This controversial idea is longtermism. If everyone is equally valuable, longtermists say, we should care about people in the future as well as people in the present, and there might be a lot of people in the future. Longtermists say 99% of humans dying is nowhere near as bad as 100%, because the remaining 1% will still be able to rebuild. So they focus on existential risks that could kill 100% of people, rather than, say, climate change, which, according to longtermists, will kill lots of people but probably not everybody. Some scientists, though, think the risk of extinction from climate change is under-studied.

There are some longtermist causes that I like, such as preventing nuclear war and preparing for pandemics. I wouldn’t have written my own column on pandemics if not for EA.

Where I disagree is on artificial intelligence (AI), which “has been a dominant focus in EA over the last decade,” according to Vox. While longtermists are worried about some shorter-term risks, such as discrimination and privacy violations, a big focus is the idea that runaway AI could kill or enslave all humans. A research analyst at the EA organization 80,000 Hours wrote, “I think there’s something like a 10% chance of an existential catastrophe resulting from power-seeking AI systems this century.”

“Personally, I am not worried about it, even though it’s possible,” Maria Gini, an AI expert at the University of Minnesota, said of existential AI risk.

What she is concerned about, however, is poorly designed AI.

“I’m kind of concerned there will be a lot of bad AI software that will claim to do things, but in fact, that is buggy, that makes mistakes.” For instance, she said, if the power grid relied on faulty AI, that could be very bad.

While AI is nothing to sneeze at, it’s also not a massive existential risk that could justify redirecting resources from, say, alleviating global poverty.

Fighting global poverty is the EA cause that appeals to me most, and EA has influenced my past writing on the subject. Among the highest-quality evidence on anti-poverty programs prized by effective altruists are randomized controlled trials. According to Jason Kerwin, an applied economist at the University, they owe this to the “Randomista” movement, which pioneered “the widespread adoption of randomized controlled trials to study development interventions.”

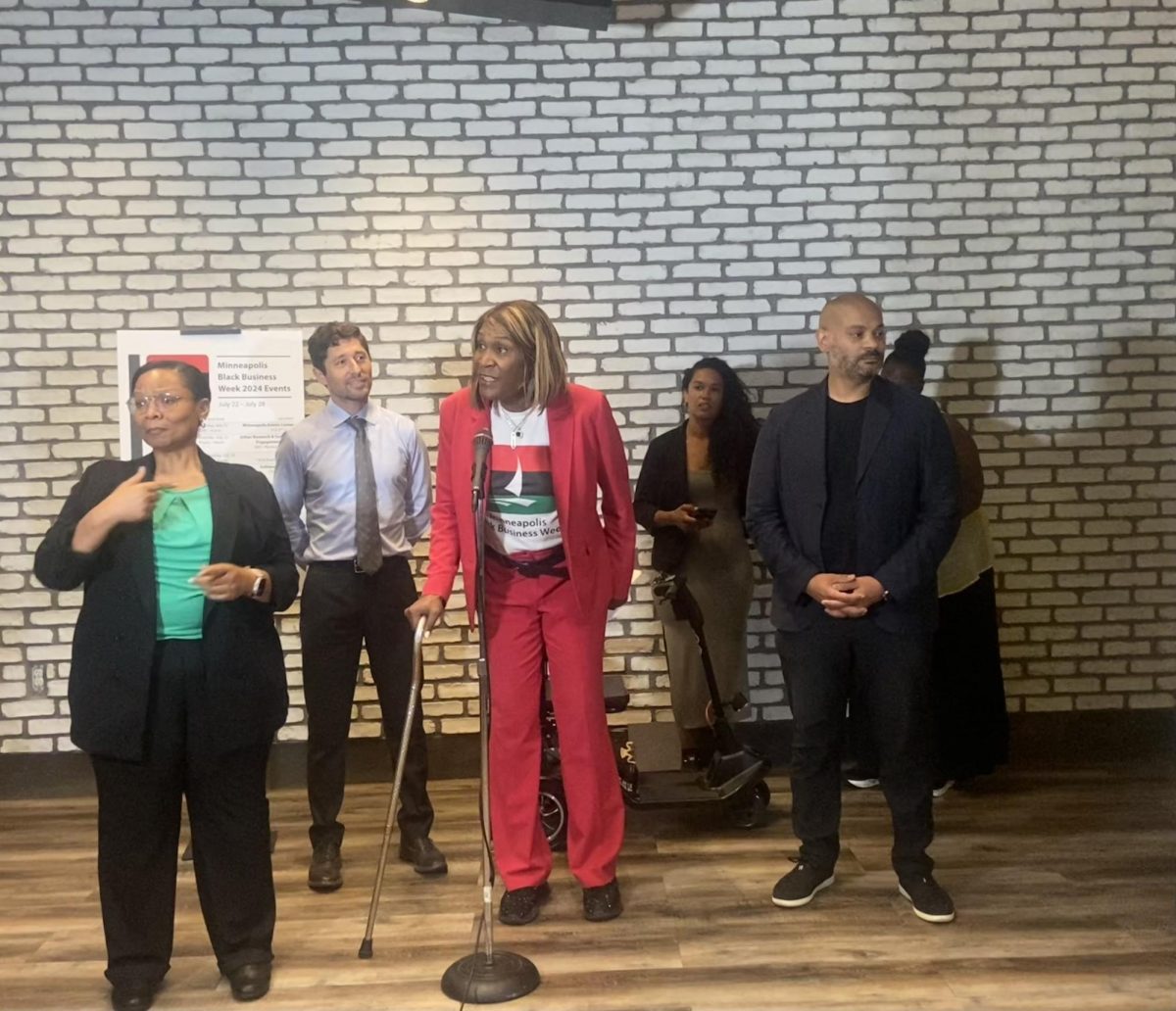

If your movement claims to care about everyone in the world equally, it helps if it’s actually representative of that world. According to one member of the EA community, who counted staff profiles on the public websites of longtermist organizations, the staff of those organizations are overwhelmingly white.

This member, who works at an EA organization and spoke to the Minnesota Daily on condition of anonymity for fear of professional repercussions, said that while they haven’t researched it, their impression is that staff tend to be more diverse at non-longtermist EA groups working on things like animal welfare and global poverty.

This member also said that for EA organizations, and especially longtermist ones, “founder effects” are a problem.

“Having been a part of EA for a long time, being trusted by EA leaders, being viewed as ‘part of the EA community’ all contribute to someone’s likelihood of being hired, all of which make it more likely for employees to ‘look like’ the people who were originally in EA,” they said.

“The recent failure of FTX is an egregious example of drawbacks of … utilitarianism,” Lia Harris, an employee at a medical NGO in Yemen, wrote to the Daily via private message on an online EA forum. Harris’ view is her own and not necessarily that of her employer.

Focusing on longtermism, Harris said, also illustrated these drawbacks. “Current people existing now are dying of poverty while EA is funding distant future people,” she wrote.

One thing I do admire about EA is that many of its adherents make concrete personal sacrifices.

I talked to Max Gehred, president of Effective Altruism University of Wisconsin-Madison and an intern at the Centre for Effective Altruism. He said because of EA, he changed his career plans from teaching physics to working on public policy. He’s also signed the Giving What We Can pledge, a secular tithe in which people give 10% of their income to effective charities.

“I went vegetarian as a result of some of the ideas I encountered through EA,” he said. At the most extreme end, some EAs have even donated their kidneys to strangers.

Another thing I appreciate is EA’s attention to neglected causes. One way to ensure you’re making the biggest impact is to pick something few others are working on.

So where does EA stand? Certainly, Bankman-Fried’s downfall removes a big source of funding and may cause some in EA to reconsider some things.

“Sam and FTX had a lot of good will — and some of that good will was the result of association with ideas I have spent my career promoting,” wrote EA philosopher Will MacAskill. “If that good will laundered fraud, I am ashamed.”

But there’s a lot more to EA than cryptocurrency and killer robots. Thanks in large part to GiveWell, the Against Malaria Foundation has raised hundreds of millions of dollars.

I hope, going into the future, effective altruism can focus more on helping the world’s most vulnerable people and animals, as well as preventing real risks that we know exist. This might not be as fun for crypto hucksters, but it will be more effectively altruistic.